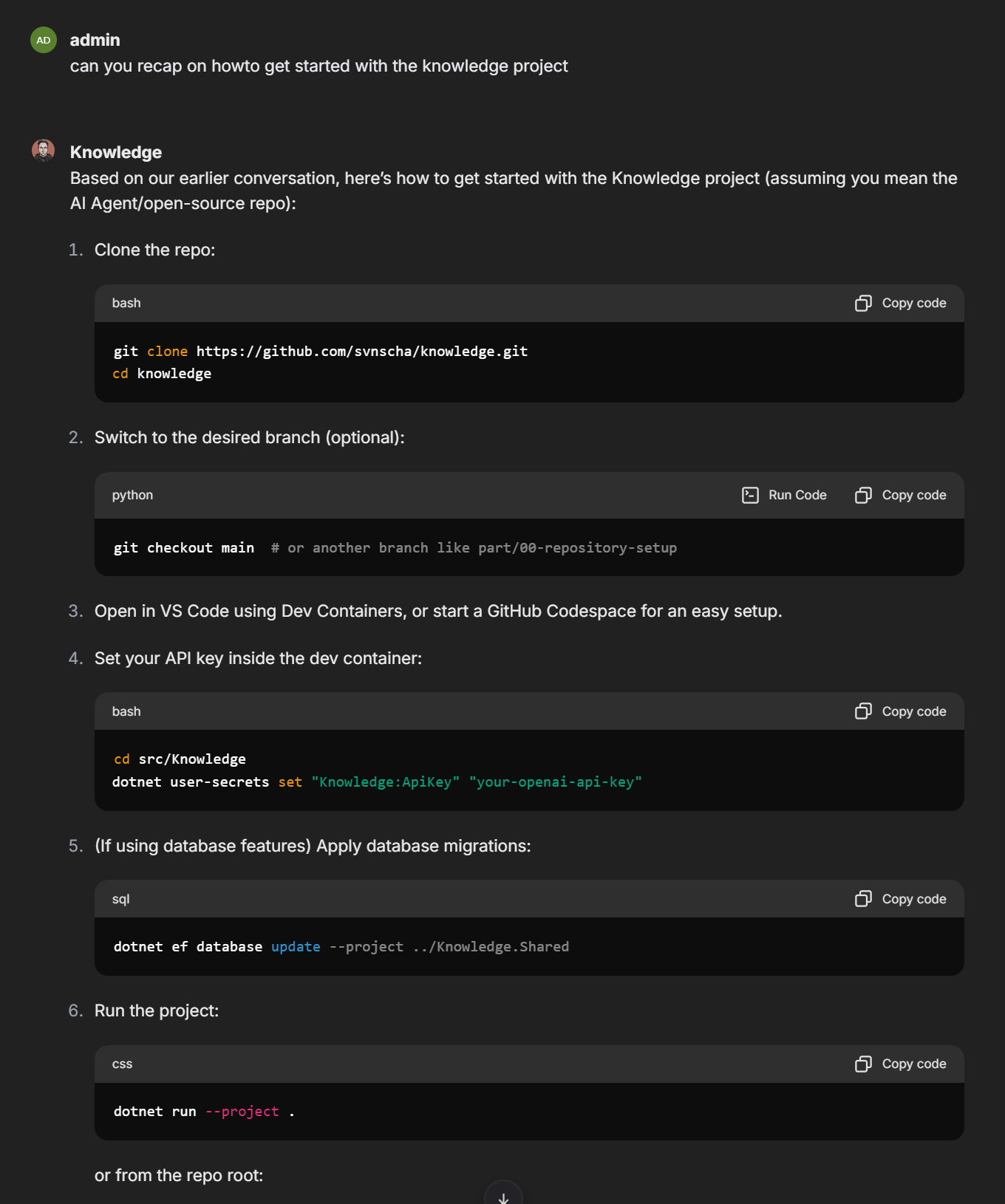

Welcome back to the AI Agents in .NET series. In the introduction, I built a basic conversational agent. In Part 1, I added database persistence, embeddings, and semantic search capabilities.

Today I'm breaking free from the built-in DevUI. Time to escape the developer dungeon.

Don't get me wrong - DevUI is fantastic for development and debugging.

Enter LibreChat - and my slightly chaotic journey to expose agents as OpenAI-compatible endpoints.

Spoiler alert: This post includes a fun debugging adventure where I thought I was done, deployed to LibreChat, patted myself on the back... and then watched everything break spectacularly. Tool-calling agents and downstream clients? Yeah, they don't play nice. The fix? A reusable middleware pattern that took me time to figure out. Learn from my pain - stay tuned for the ToolCallFilterAgent.

The Problem with DevUI

DevUI served me well. It let me test agents, inspect tool calls, and debug issues. But it has limitations:

- Single-purpose: It's a development tool, not a production interface

- No multi-user support: One conversation at a time

- Limited customization: You get what you get

For real-world use, I need something more flexible. And rather than building my own chat UI (been there, done that in early 2025, don't want to repeat), let's leverage existing open-source solutions.

Why LibreChat?

I mentioned LibreChat in my DGX Spark post, and I really enjoy it. Like, really like it.

It's:

- OpenAI API compatible: Speaks the same language as ChatGPT

- Self-hosted: Your data stays yours (take that, cloud overlords)

- Feature-rich: File uploads, conversations, presets, the whole shebang

- Beautiful: Actually looks like something you'd want to use - not like a developer accidentally vomited JSON onto a webpage

The key insight? LibreChat doesn't care what's behind the API. It just needs something that speaks OpenAI's protocol. And guess what the Microsoft Agent Framework already supports?

The Plan

Here's what I'm building:

%%{init: {"theme": "dark"}}%%

flowchart LR

LC[LibreChat] -->|OpenAI API| K[Knowledge API]

K -->|Agent Framework| A[Agents]

A --> KA[Knowledge]

A --> KSA[KnowledgeSearch]

A --> KTA[KnowledgeTitle]

KSA -->|Vector Search| PG[(PostgreSQL)]

LibreChat talks to our Knowledge API using the standard OpenAI chat completions format. Our API routes requests to our agents based on the model parameter. From LibreChat's perspective, it's just another OpenAI-compatible endpoint with multiple models to choose from.
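
To make the request shape concrete, here is a minimal sketch of the JSON body a client such as LibreChat would send. The field names (model, messages, stream) follow the OpenAI chat completions format. The ChatRequestExample helper and the sample message are invented for illustration and are not part of the Knowledge API.

```csharp
using System;
using System.Text.Json;

// Hypothetical helper for illustration only; not part of the post's code base.
public static class ChatRequestExample
{
    // Builds an OpenAI-style chat completions body. The "model" value is the
    // field the Knowledge API later uses to pick an agent.
    public static string Build(string model, string userMessage) =>
        JsonSerializer.Serialize(new
        {
            model,
            stream = true,
            messages = new[] { new { role = "user", content = userMessage } }
        });
}
```

Calling ChatRequestExample.Build("Knowledge", "Hello") produces a body whose model field is "Knowledge". Switching agents from the client side means changing that one string, nothing else.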

Removing DevUI, Embracing Swagger

First things first - I'm removing the DevUI dependency and redirecting to Swagger for API exploration. DevUI is great for debugging, but for an API-first approach, Swagger makes more sense. Plus, Swagger has that nice "I'm a real API" energy.

The home page now redirects to Swagger:

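// Reconstructed from context: send the root URL to the Swagger UI
app.MapGet("/", () => Results.Redirect("/swagger"));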

Clean and simple. The API is now focused on being a backend service. Goodbye, training wheels.

Exposing OpenAI-Compatible Endpoints

The Agent Framework provides a beautiful MapOpenAIChatCompletions method that exposes agents as OpenAI-compatible endpoints. Each agent gets its own endpoint path. It's almost too easy (foreshadowing...).

I collect agent builders during registration and map them after building the app:

// Collect agent builders for endpoint mapping

var agentBuilders = ;

builder.;

var app = builder.Build();

// ... middleware setup ...

// Map OpenAI chat completions endpoint for each registered agent

foreach (var agentBuilder in agentBuilders)
{
    app.MapOpenAIChatCompletions(agentBuilder);
}

Now each agent has its own endpoint:

/Knowledge/v1/chat/completions

/KnowledgeSearch/v1/chat/completions

Pretty slick, right?

Setting Up LibreChat

Before I go further, let me get LibreChat running so I can test this integration. If you don't have LibreChat running yet, here's the quick setup. (If you do, feel free to skip ahead and judge my configuration choices.)

Docker Compose

The LibreChat Configuration

Create librechat.yaml - this is where we point LibreChat at our API:

version: 1.2.8
cache: true
endpoints:
  custom:
    - name: "Agent Framework"
      apiKey: "not-used-but-required"
      baseURL: "http://host.docker.internal:5000/v1"
      models:
        default:
          - "Knowledge"
          - "KnowledgeSearch"
        fetch: false
      titleConvo: false
      summarize: false
      forcePrompt: false
      modelDisplayLabel: "Agent Framework"
      iconURL: "https://svnscha.de/svnscha.webp"

Docker Compose Override

Create docker-compose.override.yml to mount the config:

services:
  api:
    volumes:
      - type: bind
        source: ./librechat.yaml
        target: /app/librechat.yaml
    extra_hosts:
      - "host.docker.internal:host-gateway"

The extra_hosts line is crucial - it lets containers reach the host machine where the Knowledge API runs.

Fire It Up

Navigate to http://localhost:3080, create an account (it's local, don't worry), and you should see "Agent Framework" in the endpoint list.

But wait - if you select "Knowledge" and send a message, LibreChat hits /v1/chat/completions with "model": "Knowledge". My per-agent endpoints live at /Knowledge/v1/chat/completions instead. Houston, we have a routing problem.

The Model Routing Middleware

LibreChat (and most OpenAI clients) expect a single endpoint at /v1/chat/completions, where the model parameter selects the underlying model. In this scenario, the model parameter should determine routing instead.

I need middleware that reads the model from the request body and rewrites the path to the agent-specific endpoint. Nothing fancy, just some good old path mangling:

// Rewrite /v1/chat/completions to /{model}/v1/chat/completions based on request body

app.;

The key points:

- Enable buffering: I need to read the body to extract model, then reset it for the actual handler

- Path rewriting: Transform /v1/chat/completions → /{model}/v1/chat/completions

- Error handling: Return a proper OpenAI-style error if model is missing
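
The three points above can be sketched roughly as follows. This is not the middleware from the post, only one way to structure the same idea: the ModelRouter class, its method names, and the rule that agent names must be simple identifiers are all my assumptions. The logic is kept free of ASP.NET Core types so it can be exercised on its own, and a possible wiring is shown in comments at the end.

```csharp
#nullable enable
using System;
using System.IO;
using System.Linq;
using System.Text;
using System.Text.Json;
using System.Threading.Tasks;

// Hypothetical helper (the names are illustrative, not taken from the post).
public static class ModelRouter
{
    private const string SharedPath = "/v1/chat/completions";

    // Reads the full body as text, then rewinds the stream so the real handler
    // can read it again. The stream must be seekable; in ASP.NET Core that is
    // what context.Request.EnableBuffering() provides.
    public static async Task<string> ReadAndRewindAsync(Stream body)
    {
        using var reader = new StreamReader(body, Encoding.UTF8,
            detectEncodingFromByteOrderMarks: false, bufferSize: 1024, leaveOpen: true);
        var text = await reader.ReadToEndAsync();
        body.Position = 0;
        return text;
    }

    // Returns "/{model}/v1/chat/completions", or null when the body has no usable "model".
    public static string? TryGetAgentPath(string jsonBody)
    {
        try
        {
            using var doc = JsonDocument.Parse(jsonBody);
            var root = doc.RootElement;
            if (root.ValueKind != JsonValueKind.Object ||
                !root.TryGetProperty("model", out var model) ||
                model.ValueKind != JsonValueKind.String)
            {
                return null;
            }

            var name = model.GetString();
            // Assumption: agent names are simple identifiers, so anything else
            // (slashes, dots, empty strings) is rejected rather than put in a path.
            if (string.IsNullOrEmpty(name) ||
                !name.All(c => char.IsLetterOrDigit(c) || c == '-' || c == '_'))
            {
                return null;
            }

            return $"/{name}{SharedPath}";
        }
        catch (JsonException)
        {
            return null; // malformed JSON is treated like a missing model
        }
    }

    // Error body in the shape OpenAI clients expect: { "error": { message, type, param, code } }.
    public static string MissingModelError() =>
        JsonSerializer.Serialize(new
        {
            error = new
            {
                message = "The 'model' field is required.",
                type = "invalid_request_error",
                param = "model",
                code = (string?)null
            }
        });
}

// Possible wiring in Program.cs (untested sketch, kept as comments so this
// file compiles without the ASP.NET Core SDK):
//
// app.Use(async (context, next) =>
// {
//     if (HttpMethods.IsPost(context.Request.Method) &&
//         context.Request.Path.Value == "/v1/chat/completions")
//     {
//         context.Request.EnableBuffering();
//         var body = await ModelRouter.ReadAndRewindAsync(context.Request.Body);
//         var agentPath = ModelRouter.TryGetAgentPath(body);
//         if (agentPath is null)
//         {
//             context.Response.StatusCode = StatusCodes.Status400BadRequest;
//             context.Response.ContentType = "application/json";
//             await context.Response.WriteAsync(ModelRouter.MissingModelError());
//             return;
//         }
//         context.Request.Path = agentPath;
//     }
//     await next(context);
// });
// app.UseRouting(); // assumption: routing has to run after the rewrite to see the new path
```

Rejecting model names that are not plain identifiers is an extra safety net I added, so that a request body cannot steer the rewrite toward arbitrary paths. Checking against the list of registered agent names would be stricter still.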

Now when LibreChat sends a request, it gets routed to the right agent automatically. Back to LibreChat - select "Knowledge" from the dropdown, send a message, and... it works! Streaming responses, proper formatting, everything.

At this point, I thought I was done. Celebrated with a coffee. Time to test KnowledgeSearch...

The Tool Call Disaster (And How I Fixed It)

I switched to the KnowledgeSearch model in LibreChat, asked it to search for something, and... nothing. The request just hung. Then cancelled. No error message. No response. Just the cold, judgmental silence of broken software.

I dug through LibreChat's source code, traced the request flow, added logging everywhere. Console.WriteLine debugging like it's 2005.

Note: We should get to Observability very soon...

The Problem

My KnowledgeSearch agent uses tools - specifically the SearchConversationHistory function. When the agent executes a tool, the response stream includes FunctionCallContent and FunctionResultContent alongside the text. This is how the Agent Framework communicates "I'm calling a tool" and "here's what the tool returned."

But here's the thing - LibreChat receives this stream. It sees a function call. It thinks: "Oh, the model wants me to execute a function!" So it tries to find and execute that function. It can't (because the function lives in my backend, not LibreChat). So it gives up and cancels the request. Rude.

[Our Agent] → "I'll search for that" → FunctionCallContent{SearchConversationHistory}

[Our Agent] → [executes tool internally]

[Our Agent] → FunctionResultContent{results...}

[Our Agent] → "Based on the search, here's what I found..."

↓

[LibreChat] → Sees FunctionCallContent → "I need to call this function!"

[LibreChat] → Can't find function → Cancels request

↓

[User] → "Why isn't anything happening?" 😤

The frustrating part? You can't disable this behavior in LibreChat. It's doing the right thing for its use case - if it sends a function call to an upstream model, it expects to handle the response. But I'm the upstream model, and I've already handled my own tool calls. We're both right, and that's the most annoying kind of bug.

The Solution

Don't send tool call content downstream. Filter it out before it leaves the API. If LibreChat never sees the tool calls, it can't get confused by them. taps forehead

Meet ToolCallFilterAgent - a delegating agent that wraps any agent and strips FunctionCallContent and FunctionResultContent from responses. It's like a bouncer for your API responses:

/// <summary>

/// A delegating agent that filters out tool call content from responses.

/// This prevents downstream consumers from seeing FunctionCallContent and FunctionResultContent

/// that they cannot execute.

/// </summary>

public sealed

Now in the AgentFactory, I wrap KnowledgeSearchAgent:

public static AIAgent

The agent still uses tools internally, but clients only see the final text response. Clean and transparent. What happens in the backend stays in the backend.
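
The core of the filter is small enough to show in isolation. The sketch below is not the ToolCallFilterAgent itself: it uses simplified stand-in records with the same names as the content types mentioned above, so that it compiles without any Agent Framework packages, and the ToolCallFilter helper is a name I made up. The real wrapper would apply the same predicate to the contents of each response message or streaming update.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Simplified stand-ins for the content types named in the post. These are NOT
// the real framework classes; they exist so the sketch compiles on its own.
public abstract record AIContent;
public sealed record TextContent(string Text) : AIContent;
public sealed record FunctionCallContent(string CallId, string Name) : AIContent;
public sealed record FunctionResultContent(string CallId, string Result) : AIContent;

// Hypothetical helper showing only the filtering step.
public static class ToolCallFilter
{
    // Keeps everything except tool calls and tool results, preserving order.
    public static List<AIContent> Strip(IEnumerable<AIContent> contents) =>
        contents
            .Where(c => c is not FunctionCallContent and not FunctionResultContent)
            .ToList();
}
```

Fed the four items from the flow shown earlier (text, function call, function result, text), Strip returns only the two text items, which is what a downstream client should see.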

Back to LibreChat - KnowledgeSearch now works! The agent searches, finds results, and responds - all without LibreChat ever knowing tools were involved. It's like magic, except it's just careful filtering.

Title Generation with KnowledgeTitleAgent

LibreChat has a feature called titleConvo - it automatically generates titles for conversations using the AI. But my main agents have tools and complex system prompts that are overkill for simple title generation. It's like using a flamethrower to light a candle.

The solution: a dedicated title agent. It's intentionally simple - no tools, no embeddings, just a focused system prompt:

/// <summary>

/// Simple agent for generating conversation titles.

/// No tools, no embeddings - just a basic helpful assistant.

/// Designed for use with LibreChat's title generation feature.

/// </summary>

public static

Register it alongside the other agents:

agentBuilders.;

Now update librechat.yaml to use the title agent:

version: 1.2.8
cache: true
endpoints:
  custom:
    - name: "Agent Framework"
      apiKey: "not-used-but-required"
      baseURL: "http://host.docker.internal:5000/v1"
      models:
        default:
          - "Knowledge"
          - "KnowledgeSearch"
          - "KnowledgeTitle"
        fetch: false
      titleConvo: true
      titleModel: "KnowledgeTitle"
      summarize: false
      forcePrompt: false
      modelDisplayLabel: "Agent Framework"
      iconURL: "https://svnscha.de/svnscha.webp"

Now LibreChat uses KnowledgeTitle specifically for generating conversation titles, while Knowledge and KnowledgeSearch handle the actual conversations.

The Complete Program.cs

Here's what Program.cs looks like after all these changes:

using .;

using .;

using ..;

using ..;

using ...;

using ..;

using ;

var builder = WebApplication.CreateBuilder(args);

// Collect agent builders for endpoint mapping

var agentBuilders = ;

builder.;

// Register background service for embedding processing

builder.Services.;

builder.Services.;

builder.Services.;

var app = builder.Build();

// Rewrite /v1/chat/completions to /{model}/v1/chat/completions based on request body

app.;

app.;

app.;

// Map OpenAI chat completions endpoint for each registered agent

foreach (var agentBuilder in agentBuilders)
{
    app.MapOpenAIChatCompletions(agentBuilder);
}

app.;

app.;

app.Run();

That's it. No CORS configuration needed (LibreChat makes server-side requests). No custom request/response models. No manual streaming code. The framework does the heavy lifting - I just wire it up. Sometimes the best code is the code you don't have to write.

The key insights:

- Each agent gets its own endpoint via MapOpenAIChatCompletions

- Inline middleware handles routing from /v1/chat/completions based on model

- ToolCallFilterAgent hides internal tool execution from downstream clients

- KnowledgeTitleAgent handles title generation without tool complexity

LibreChat Test

Open LibreChat, select "Agent Framework" as the endpoint, choose a model from the dropdown (Knowledge, KnowledgeSearch, or KnowledgeTitle), and start chatting. You should see:

- Streaming responses (that satisfying typing effect)

- Tool calls working transparently (KnowledgeSearch uses tools, but you only see the results)

- Automatic title generation (thanks to KnowledgeTitle)

Architecture Overview

Let me step back and look at what I've built (and maybe pat myself on the back a little):

%%{init: {"theme": "dark"}}%%

flowchart TB

subgraph Clients

LC[LibreChat]

API[Direct API Calls]

end

subgraph "Knowledge API"

MR[Model Router Middleware]

E1["/Knowledge/v1/chat/completions"]

E2["/KnowledgeSearch/v1/chat/completions"]

E3["/KnowledgeTitle/v1/chat/completions"]

end

subgraph Agents

KA[Knowledge Agent]

KSA[KnowledgeSearch Agent]

KTA[KnowledgeTitle Agent]

TCF[ToolCallFilterAgent]

end

subgraph Storage

PG[(PostgreSQL)]

end

LC -->|"/v1/chat/completions"| MR

API --> MR

MR -->|"model: Knowledge"| E1

MR -->|"model: KnowledgeSearch"| E2

MR -->|"model: KnowledgeTitle"| E3

E1 --> KA

E2 --> TCF

TCF --> KSA

E3 --> KTA

KSA -->|Vector Search| PG

The flow is clean:

- Request comes in to /v1/chat/completions with "model": "KnowledgeSearch"

- Middleware rewrites path to /KnowledgeSearch/v1/chat/completions

- Framework routes to the agent, which is wrapped in ToolCallFilterAgent

- Agent executes tools internally, filter strips tool content from response

- Client sees clean text output

Multiple clients, multiple agents, one simple routing pattern. It's almost... elegant?

Swagger is still available at /swagger for API exploration and testing.

Wrapping Up

I've taken the agents from a development-only DevUI to something that can serve real users through a proper chat interface. And I did it with surprisingly little code - plus one hard-won debugging lesson that I'm still a bit salty about.

The key insights from this journey:

- MapOpenAIChatCompletions does the heavy lifting - one line per agent, full OpenAI compatibility

- Each agent gets its own endpoint - clean separation, easy to test directly

- Simple inline middleware handles routing - LibreChat sends to /v1/chat/completions, I rewrite to /{model}/v1/chat/completions

- Tool-calling agents need filtering - the ToolCallFilterAgent pattern is essential when exposing agents to downstream clients that don't understand your internal tool calls

- Dedicated agents for specific tasks - KnowledgeTitleAgent for titles, KnowledgeSearch for search, Knowledge for general chat

What's Next?

I've got a solid foundation now - persistence, embeddings, semantic search, a proper UI, and automatic title generation. But there's more to explore:

- Observability: OpenTelemetry, Jaeger, understanding what's happening at scale

- Agent Loop: Exploring the magic behind automated AI Agents

- Authentication: Proper API key validation for production deployments (because "meh, whatever" isn't a security strategy)

But first, I'm letting this settle. Play with LibreChat, see how your agents perform with real conversations, and notice what's missing. The best features come from actual use - not from staring at code and imagining what users might want.

See you in the next post. 🚀

The companion repository has been updated with the part/02-connect-librechat branch containing all the code from this post. Check it out on GitHub.